What model should I use to forecast…?

Finding the best forecasting model for your data can feel like finding a needle in a haystack. The instinct to copy a model from a research article is understandable, but it is risky. What performs best on one dataset often underperforms on another, a reality formalized by the “no free lunch” results in optimization and learning, which show that there is no universally superior algorithm across problems (Wolpert and Macready 1997). In empirical forecasting, the landmark M competitions reached the same practical conclusion at scale: across the 100,000 series of M4, no single method dominated and combinations tended to win (Makridakis et al. 2020, M4). In practice, you cannot know whether a sizable accuracy gain, say 10 percent or more, is available unless you test alternatives on your own data.

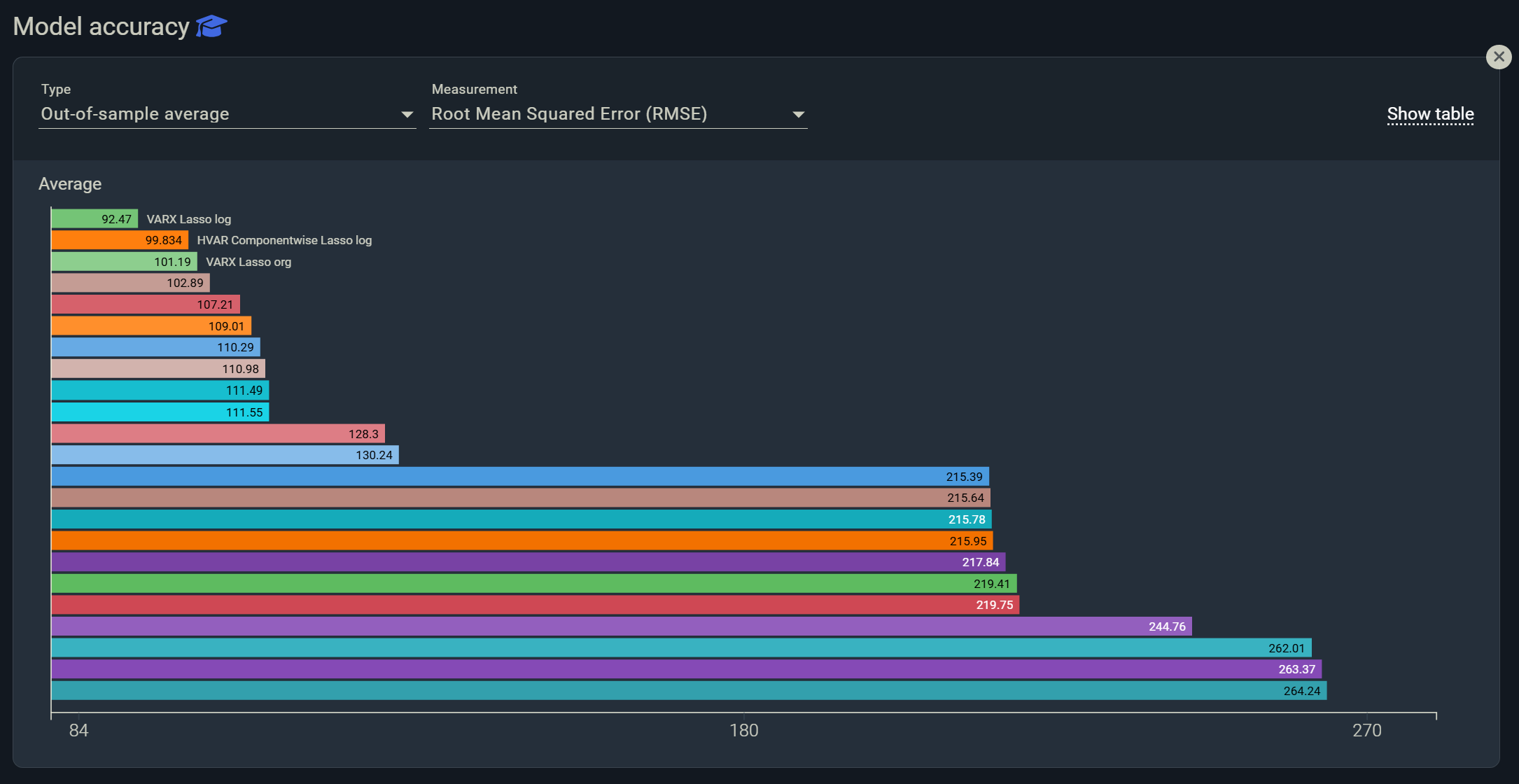

Modern tooling makes that feasible. Automated platforms now benchmark broad libraries of statistical, econometric, and machine learning models on your series, then combine the best performers. Feature-based meta-learners such as FFORMA learn, from the characteristics of each series, how much weight to give each method, which improves accuracy relative to choosing a single model (Montero-Manso et al. 2020). Time-series cross-validation with a rolling forecast origin provides a rigorous out-of-sample yardstick for comparing them fairly (Hyndman, FPP3 §5.10; Hyndman blog).
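To make the rolling-origin idea concrete, here is a minimal Python sketch of an expanding-window splitter, assuming a series indexed 0 to n-1 and Python 3.9 or later. The function name `rolling_origin_splits` and its parameters are hypothetical, not taken from any library: each split trains on everything before the origin, tests on the next `horizon` points, and then moves the origin forward by `step`.

```python
from collections.abc import Iterator


def rolling_origin_splits(
    n_obs: int, initial: int, horizon: int, step: int = 1
) -> Iterator[tuple[range, range]]:
    """Yield (train, test) index ranges for an expanding-window, rolling-origin evaluation.

    Hypothetical helper: training always starts at index 0 and ends just before the origin;
    the test block is the next `horizon` observations.
    """
    origin = initial
    # Stop once a full horizon no longer fits after the origin.
    while origin + horizon <= n_obs:
        yield range(0, origin), range(origin, origin + horizon)
        origin += step


# Example: 10 observations, first fit on 6, forecast 2 steps ahead from each origin.
for train, test in rolling_origin_splits(10, initial=6, horizon=2):
    print(f"train 0..{train[-1]}  test {list(test)}")
```

With 10 observations, an initial window of 6, and a 2-step horizon, this yields three splits with origins at 6, 7, and 8. A sliding-window variant, which drops the oldest observations as the origin advances, is a common alternative when older data may no longer be representative.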

Why replicating a paper is not enough

Academic precedents are valuable, yet their results are conditioned on time span, sampling frequency, regime, and data preprocessing. The M4 findings explicitly document large heterogeneity across series and show that ensembles, not single models, led the leaderboard (Makridakis et al. 2020). Even simple combinations can rank near the top, illustrating how averaging models whose errors are not perfectly correlated cancels part of those errors (Shaub 2020). The principle is old and sturdy: combining forecasts can reduce mean squared error relative to any constituent model (Bates and Granger 1969).
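The Bates and Granger argument can be sketched with standard algebra, assuming two unbiased forecasts f_1 and f_2 whose errors have variances σ_1², σ_2² and covariance σ_12. The combined forecast w f_1 + (1 - w) f_2 has the error variance on the first line; minimizing it over w gives the weight and variance on the second.

```latex
\sigma_c^2(w) = w^2\sigma_1^2 + (1-w)^2\sigma_2^2 + 2w(1-w)\,\sigma_{12}

w^{*} = \frac{\sigma_2^2 - \sigma_{12}}{\sigma_1^2 + \sigma_2^2 - 2\sigma_{12}},
\qquad
\sigma_c^2(w^{*}) = \frac{\sigma_1^2\sigma_2^2 - \sigma_{12}^2}{\sigma_1^2 + \sigma_2^2 - 2\sigma_{12}}
```

Because w = 0 and w = 1 are feasible choices, the optimal combination can never do worse than the better single forecast in theory. With equal variances σ² and uncorrelated errors, w* = 1/2 and the combined variance halves to σ²/2. In practice the weights must be estimated from data, which adds estimation error of its own.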

What the evidence says you should do

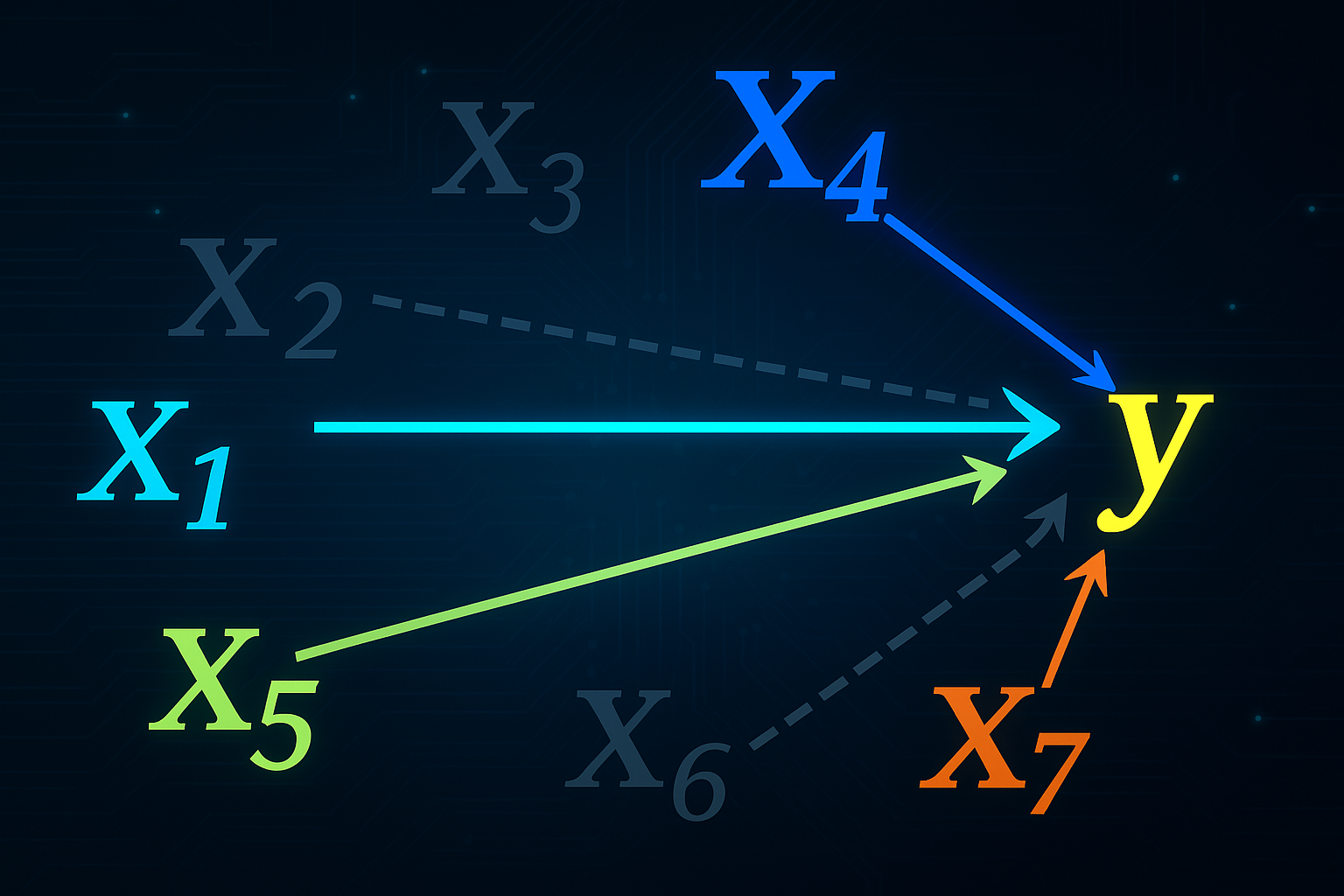

- Benchmark many models, not one: Evaluate diverse classes, for example ETS, ARIMA, dynamic regression, TBATS, other state-space models, gradient boosting, random forests, and specialized intermittent-demand methods. Large-scale evaluations in M4 show that breadth pays off and that the top approach varies by series traits and horizon (Makridakis et al. 2020).

- Use combinations or meta-learning: Weight top models rather than selecting a single winner. FFORMA learns weights from time-series features and outperforms one-model selection on average (Montero-Manso et al. 2020). This operationalizes the combination logic behind the classic results (Bates and Granger 1969).

- Validate with rolling-origin CV: Evaluate on a moving forecast origin so that testing mirrors production use and multi-step accuracy is scored robustly (FPP3 §5.10; Hyndman blog).

- Let the data characteristics guide you: Seasonality strength, trend, intermittency, and frequency should inform both the candidate set and the loss function. A practitioner-friendly reference is the open textbook Forecasting: Principles and Practice (FPP3).
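The benchmark-then-combine loop described in this list can be sketched in plain Python. To stay self-contained, this minimal sketch swaps in four simple benchmark methods described in FPP3 (naive, seasonal naive, drift, and historical mean) instead of ETS, ARIMA, or ML models, and uses an equal-weight average instead of FFORMA's learned weights. The function names, the season length `m=4`, and the example series are assumptions for illustration.

```python
from statistics import mean


def naive(history, h):
    # Repeat the last observed value for every step ahead.
    return [history[-1]] * h


def seasonal_naive(history, h, m=4):
    # Repeat the value from the same season of the last observed cycle (m = season length).
    return [history[len(history) - m + (i % m)] for i in range(h)]


def drift(history, h):
    # Extrapolate the straight line joining the first and last observations.
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return [history[-1] + slope * (i + 1) for i in range(h)]


def historical_mean(history, h):
    # Forecast every step with the mean of all past observations.
    return [mean(history)] * h


METHODS = {"naive": naive, "snaive": seasonal_naive, "drift": drift, "mean": historical_mean}


def backtest(y, initial, horizon):
    """Rolling-origin (expanding window, step 1) MAE per method plus an equal-weight combo."""
    abs_errors = {name: [] for name in [*METHODS, "combo"]}
    for origin in range(initial, len(y) - horizon + 1):
        history, actual = y[:origin], y[origin:origin + horizon]
        preds = {name: f(history, horizon) for name, f in METHODS.items()}
        # Equal-weight combination: average the member forecasts at each step.
        preds["combo"] = [mean(step) for step in zip(*(preds[n] for n in METHODS))]
        for name, fc in preds.items():
            abs_errors[name].extend(abs(a - f) for a, f in zip(actual, fc))
    return {name: mean(errs) for name, errs in abs_errors.items()}


# Hypothetical series: a repeating 4-period pattern plus a gentle upward trend.
y = [10 + [0, 3, 1, 5][t % 4] + 0.5 * t for t in range(24)]
scores = backtest(y, initial=8, horizon=4)
for name, mae in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name:>6}: MAE {mae:.2f}")
```

Because the absolute error of an average is never larger than the average of the absolute errors, the equal-weight combination's MAE here can never exceed the mean MAE of its members, although it can still lose to the single best one. A real workflow would swap in richer models and weighting schemes while keeping the same rolling-origin loop.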

Why automation changes the economics

Historically, implementing “all” models was cost-prohibitive. Today, automated forecasting systems orchestrate the heavy lifting, from feature engineering and hyperparameter search to backtesting and ensembling. For example, Indicio provides a no-code interface that runs a large library of econometric, statistical, and machine-learning models, applies automated variable selection, and ranks or combines them via backtesting in minutes. The vendor reports a minimum 30 percent improvement in forecast accuracy and fast, no-code workflows on real-world datasets (Indicio overview; Indicio feature page). While results will depend on your data, this aligns with competition evidence that broad, well-validated, and combined model sets outperform narrow, manually curated ones (Makridakis et al. 2020; Montero-Manso et al. 2020).

A pragmatic playbook to become your team’s “super-forecaster”

- Define decision-first metrics: Choose the loss, such as MAE, RMSE, MAPE, or pinball loss, per horizon according to the business action it informs, and check the calibration of prediction intervals where uncertainty matters (FPP3).

- Cast a wide net of candidates fast: Generate a portfolio spanning exponential smoothing, ARIMA variants, regression models with exogenous drivers, and ML models that capture nonlinearity. Evidence from the M competitions supports wide exploration and combinations (Makridakis et al. 2020).

- Automate selection and weighting: Use meta-learning or stacking to pick and weight models by series features, as in FFORMA, rather than hard-picking a single model (Montero-Manso et al. 2020).

- Backtest rigorously, deploy simply: Use rolling-origin cross-validation, and guard against overfitting with out-of-time tests before promoting a new champion model (FPP3 §5.10).

- Operationalize for scale: Prefer platforms that are no-code and auditable, so economists, planners, and analysts can all run benchmarks quickly and trace decisions. Indicio is one example focused on backtested, multi-model pipelines and variable selection for practical deployment (Indicio overview).
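For the decision-first metrics step, scoring by horizon might look like the following minimal sketch. The helper names `horizon_metrics` and `pinball_loss` are hypothetical, and the input layout (one list of h-step-ahead values per forecast origin) and reporting MAPE in percent are assumptions.

```python
from math import sqrt


def horizon_metrics(actuals, forecasts):
    """Return {h: {"MAE", "RMSE", "MAPE"}} for each forecast step h = 1..H.

    actuals and forecasts are lists of equal-length lists: one inner list per
    forecast origin, where index h-1 holds the h-step-ahead value.
    """
    out = {}
    for i in range(len(actuals[0])):
        errs = [a[i] - f[i] for a, f in zip(actuals, forecasts)]
        acts = [a[i] for a in actuals]
        n = len(errs)
        out[i + 1] = {
            "MAE": sum(abs(e) for e in errs) / n,
            "RMSE": sqrt(sum(e * e for e in errs) / n),
            # Percentage error is undefined if any actual is zero (raises ZeroDivisionError here).
            "MAPE": 100 * sum(abs(e / a) for e, a in zip(errs, acts)) / n,
        }
    return out


def pinball_loss(actual, quantile_forecast, tau):
    """Quantile (pinball) loss for a forecast of the tau-quantile, with 0 < tau < 1."""
    diff = actual - quantile_forecast
    # Under-prediction costs tau per unit; over-prediction costs (1 - tau) per unit.
    return max(tau * diff, (tau - 1) * diff)


# Two forecast origins, each with 1- and 2-step-ahead values (hypothetical numbers).
actuals = [[100, 110], [120, 130]]
point_fc = [[90, 110], [130, 120]]
print(horizon_metrics(actuals, point_fc))
print(pinball_loss(actual=10, quantile_forecast=8, tau=0.9))
```

Reporting each horizon separately matters because a model that wins one step ahead may not win twelve steps ahead. For probabilistic forecasts, averaging the pinball loss over several quantile levels gives one common summary score.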

Bottom line

The best model is the one that wins on your data under your loss function, not the one someone else used. The empirical record, from Bates and Granger through M4 and FFORMA, supports broad model benchmarking, combinations, and rigorous rolling-origin testing. With modern automation you can run that process in minutes rather than months, and double-digit accuracy improvements are a realistic, though not guaranteed, outcome when moving from a narrow, handpicked set to a validated, combined portfolio of models (Makridakis et al. 2020; Montero-Manso et al. 2020; Wolpert and Macready 1997; Hyndman, FPP3). If you want the fastest path from “which model?” to “we improved accuracy by 30 percent,” adopting an automated platform like Indicio is a pragmatic, evidence-consistent choice (Indicio overview).