Machine learning models are often criticized for their black-box nature: it is difficult to understand how a model arrives at its predictions, and therefore challenging to explain why it produced a particular prediction.

It can also be challenging and time-consuming to modify a machine learning model if it is not performing well.

1. It might not be the optimal approach to accurate forecasting

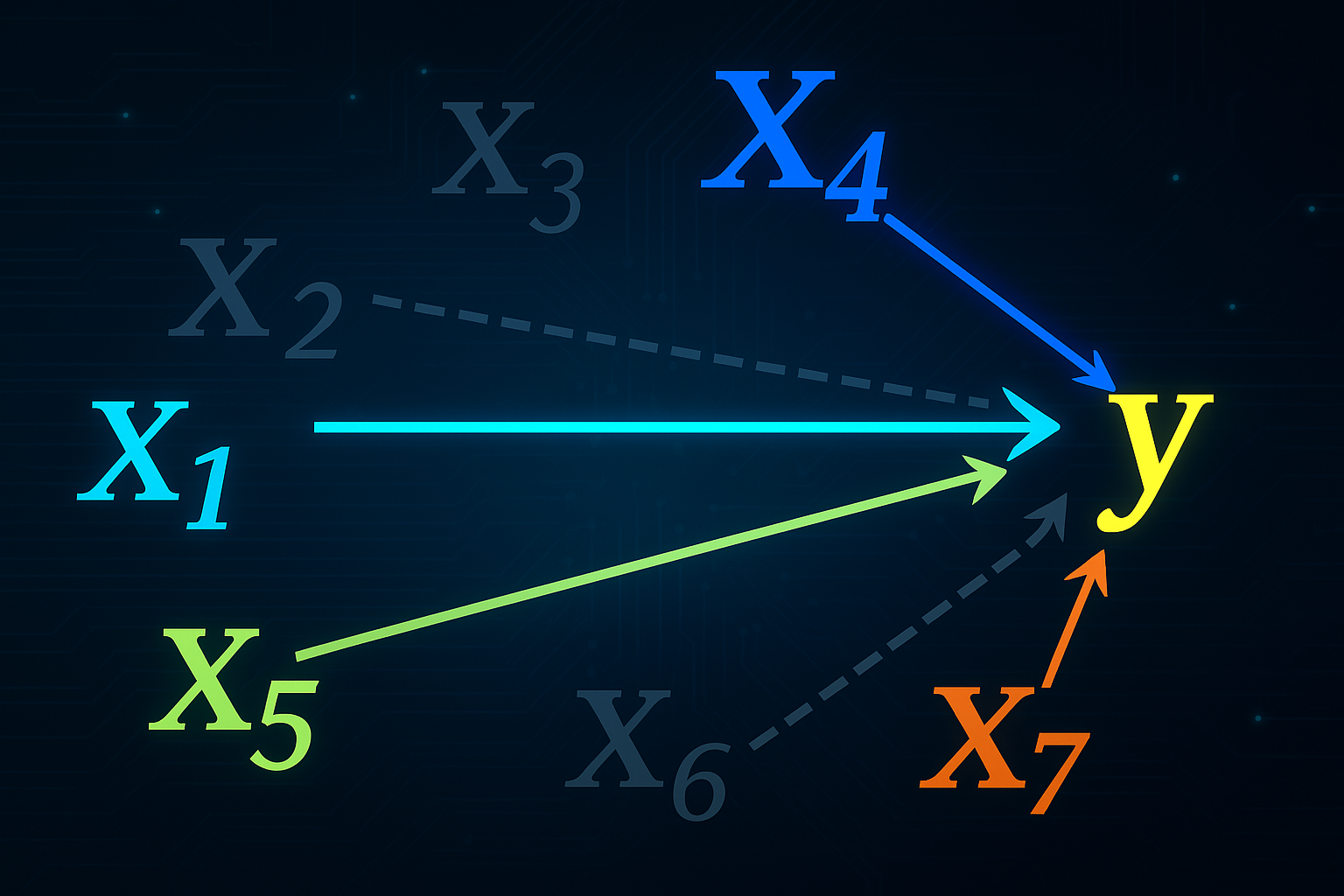

Machine learning models excel in many applications, but not every forecasting setting is one of them. Why is that? A machine learning model generally requires 5 000 – 10 000 observations to provide optimal results. However, when forecasting, you often have just 50 – 500 observations. In these settings, econometric models based on statistical-learning techniques can often provide better results. These models are tuned to pick up only on patterns and trends that actually improve forecast accuracy, sidestepping the need for larger amounts of data.

2. Machine learning models can be unstable and prone to overfitting

Overfitting can occur if the data used to train the model is too specific to one time period. The result is a model with a good in-sample fit that does not perform well out of sample. You could say that the model is very good at describing the past, but unable to separate the patterns that carry over into the future from the noise and one-off events that do not. This leads to inaccurate predictions whenever the future to be predicted is not very similar to past developments. It is therefore key to evaluate out-of-sample performance, not just in-sample performance. A model might look exemplary on the dataset it was trained on, but you only see its actual performance when you test it on a new set of data. Because machine learning models have more parameters than their statistical counterparts, they are more complex and also more prone to overfitting.
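The gap between in-sample and out-of-sample performance can be illustrated with a small sketch. Everything here is an assumption made for illustration: the series is synthetic, the split point is arbitrary, and the "memorizer" is a deliberately extreme stand-in for an overfit model, not a real machine learning algorithm. It stores every training observation and answers with the nearest one, so it fits the past perfectly.

```python
import math

def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def fit_linear_trend(times, values):
    """Closed-form ordinary least squares for y = a + b * t."""
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(values) / n
    covariance = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, values))
    variance = sum((t - t_mean) ** 2 for t in times)
    b = covariance / variance
    a = y_mean - b * t_mean
    return lambda t: a + b * t

def fit_memorizer(times, values):
    """Overfit on purpose: store every training point, answer with the nearest one."""
    table = dict(zip(times, values))
    def predict(t):
        nearest = min(table, key=lambda s: abs(s - t))
        return table[nearest]
    return predict

# Synthetic series (an assumption): linear trend plus a bounded, deterministic wiggle.
times = list(range(50))
series = [1.0 * t + math.sin(3 * t) for t in times]

# Chronological split: fit on the first 40 points, hold out the last 10.
split = 40
train_t, test_t = times[:split], times[split:]
train_y, test_y = series[:split], series[split:]

results = {}
for name, fit in [("linear trend", fit_linear_trend), ("memorizer", fit_memorizer)]:
    model = fit(train_t, train_y)
    results[name] = {
        "in_sample": mse(train_y, [model(t) for t in train_t]),
        "out_of_sample": mse(test_y, [model(t) for t in test_t]),
    }
    print(name, results[name])
```

Judged on in-sample error alone, the memorizer looks like the better model because its training error is zero. The held-out points reverse that ranking, which is why the split has to respect time order and the comparison has to use data the model has not seen.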

How can you avoid overfitting your forecast models?

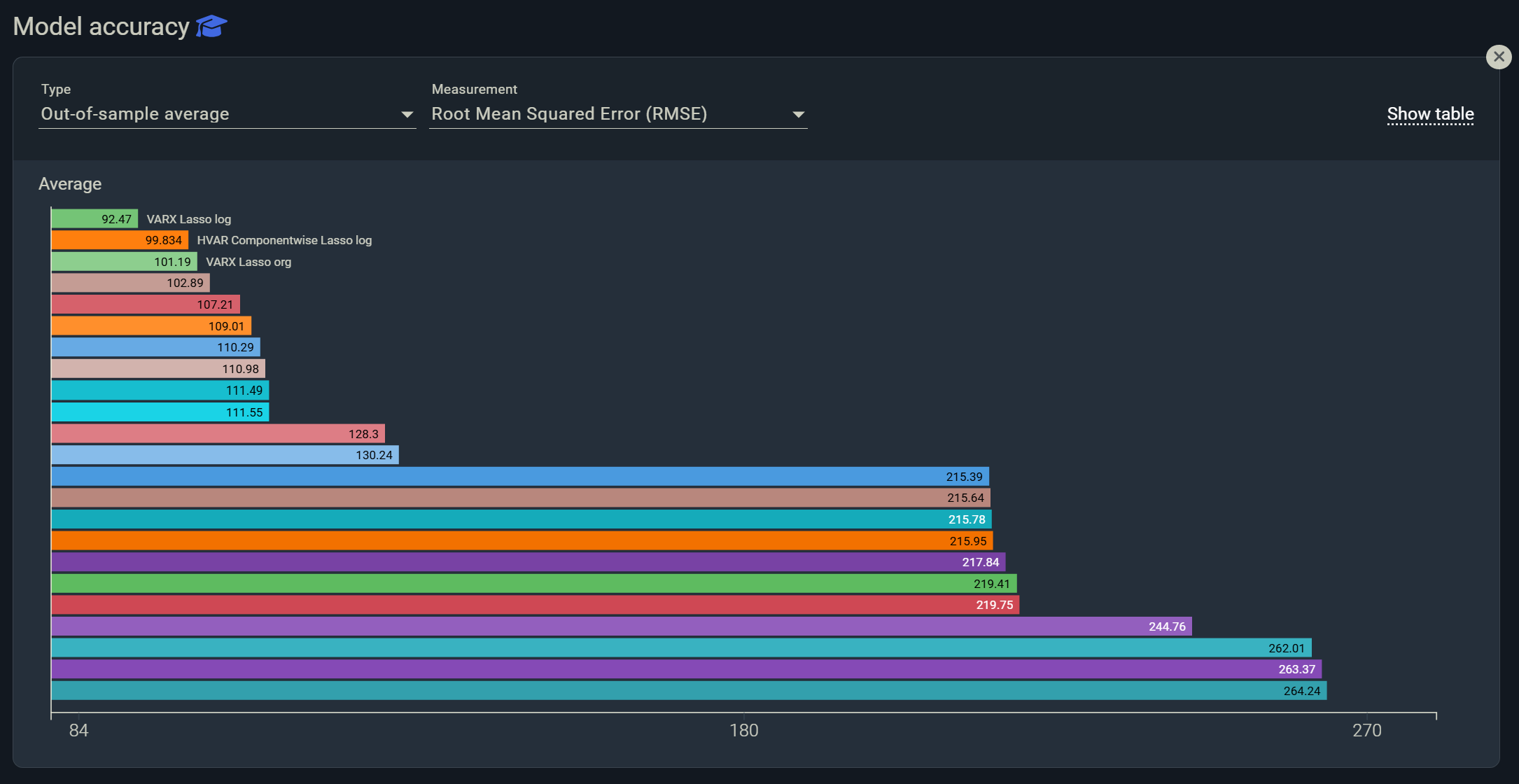

One way is to automatically test a whole range of statistical models, such as variations of VAR and VECM (to list a few examples), and weight them together based on their out-of-sample performance. Because the weights follow from measured performance rather than from a manual choice of model, the resulting numbers are unaffected by subjectivity. Another way to reduce variance and avoid overfitting is to employ Lasso and other regularization techniques and tune them through cross-validation.
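As a rough sketch of weighting candidate models by their out-of-sample performance, the example below uses three very simple forecasters as stand-ins for the VAR and VECM variations mentioned above. The inverse-MSE weighting scheme, the holdout length, the function names, and the synthetic series are all assumptions chosen for illustration; other weighting schemes are equally possible.

```python
def naive(history, horizon):
    """Repeat the last observed value."""
    return [history[-1]] * horizon

def historical_mean(history, horizon):
    """Repeat the mean of all observed values."""
    mean = sum(history) / len(history)
    return [mean] * horizon

def drift(history, horizon):
    """Extend the straight line through the first and last observation."""
    slope = (history[-1] - history[0]) / (len(history) - 1)
    return [history[-1] + slope * (h + 1) for h in range(horizon)]

def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def out_of_sample_weights(series, models, holdout):
    """Score each model on the last `holdout` points and weight it by inverse MSE."""
    train, test = series[:-holdout], series[-holdout:]
    errors = {name: mse(test, model(train, holdout)) for name, model in models.items()}
    # The small constant guards against division by zero for a perfect forecast.
    inverse = {name: 1.0 / (error + 1e-12) for name, error in errors.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}

def combined_forecast(series, models, weights, horizon):
    """Refit every model on the full series and blend the forecasts."""
    forecasts = {name: model(series, horizon) for name, model in models.items()}
    return [sum(weights[name] * forecasts[name][h] for name in models)
            for h in range(horizon)]

# Synthetic series (an assumption): upward trend with an alternating wiggle.
series = [10.0 + 0.8 * t + (1.5 if t % 2 else -1.5) for t in range(30)]
models = {"naive": naive, "historical mean": historical_mean, "drift": drift}

weights = out_of_sample_weights(series, models, holdout=6)
forecast = combined_forecast(series, models, weights, horizon=4)
print(weights)
print(forecast)
```

On this trending series the historical mean scores worst on the holdout and therefore receives the smallest weight, without anyone having to decide that by hand. In practice the holdout evaluation would typically be repeated over several rolling windows rather than a single split.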

3. Models can be expensive to build and maintain

Building a machine-learning model can be expensive, especially if it needs to be custom-built for your specific data. In addition, you may need to hire a data scientist or machine-learning expert to maintain and update your model as your data changes.

Machine learning is self-sufficient, but it is prone to errors. Suppose you train a model on data sets that are too small for it to capture trends and patterns that are general enough. As a result, the model may misinterpret the final observations before the forecast. Ultimately, you run the risk of getting biased forecast results, which in turn affects the business decisions that depend on these figures.

Such miscalculations can set off a chain of errors that goes undetected for extended periods. When the errors finally come to light, it takes considerable time and effort to identify the source of the problem, and even longer to correct it.
